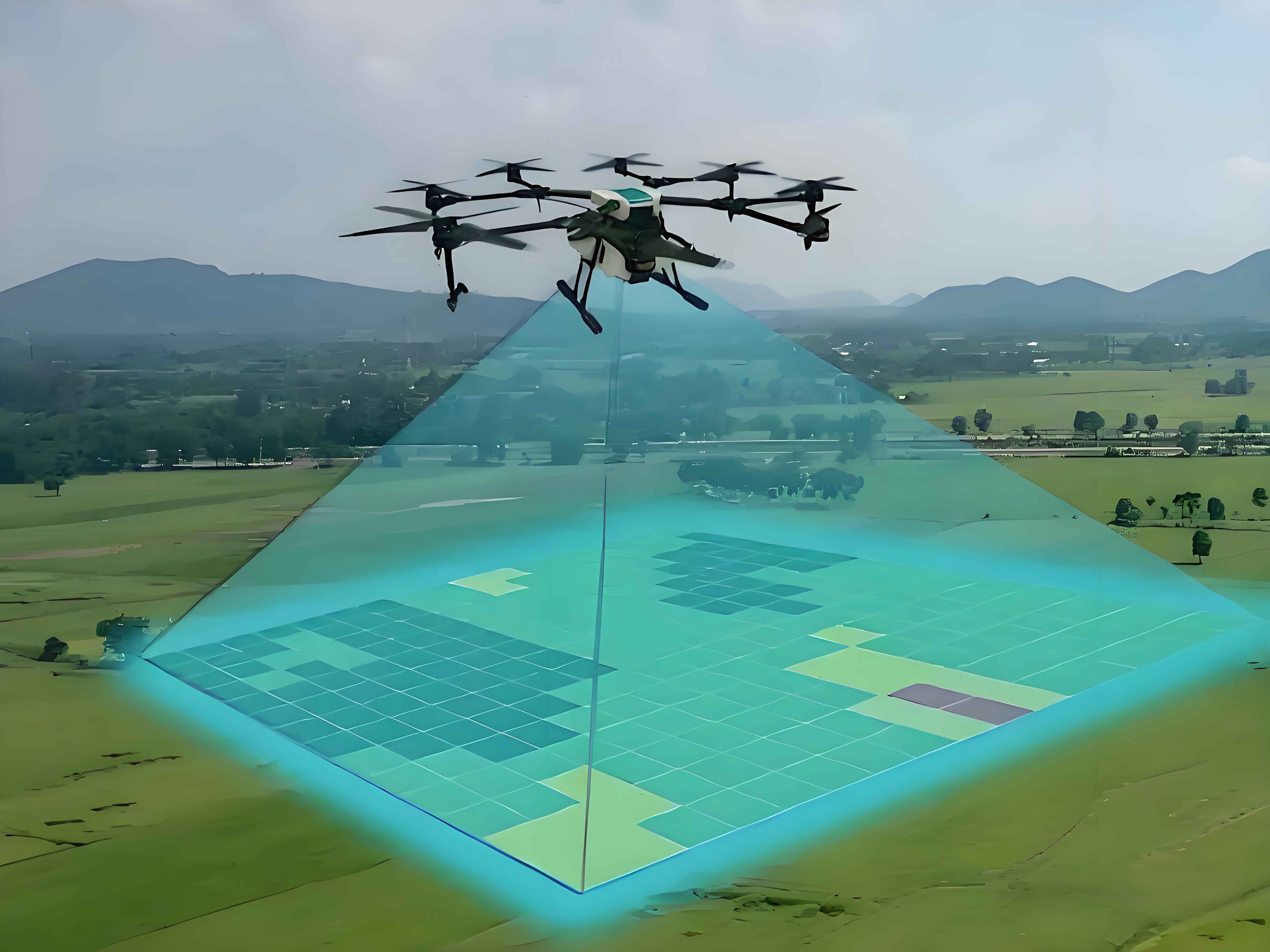

Surveying drones have revolutionized data collection across numerous industries, yet their proliferation introduces significant security vulnerabilities. Traditional detection methods relying on radar, RF signals, or acoustic sensors face limitations in cost, environmental interference, and reliability. Infrared imaging offers a robust alternative with long-range capability and immunity to noise. However, detecting surveying UAVs in infrared imagery presents unique challenges: targets occupy very few pixels (often 3-5), thermal signatures weaken with distance, and complex backgrounds create a severe foreground-background imbalance. Together, these factors make infrared small UAV detection exceptionally demanding, as the 3D visualization illustrates: weak thermal signatures blend into the background noise.

Our Feature Enhancement Network (FENet) addresses these challenges through a tailored encoder-decoder architecture. The core innovation lies in the Multi-scale Feature Attention (MSFA) module, which adaptively preserves target details across scales. MSFA integrates three components: channel attention (CA), dilated spatial attention (DSA), and a Multi-Scale Convolution Block (MSCB). The MSCB processes features using depthwise separable convolutions at multiple kernel sizes (3×3, 5×5, 7×7) followed by channel shuffling to enhance cross-channel communication:

$$ \text{MSCB}(x_i) = \text{PW}_{1\times1} \left( S_g \sum_{k \in \{3,5,7\}} \text{DW}_k (\bar{x}_i) \right) $$

where \( \bar{x}_i = \text{PW}_{1\times1}(x_i) \) expands channels, \( \text{DW}_k \) denotes depthwise convolution, and \( S_g \) is channel shuffle. DSA then captures spatial relationships using parallel dilated convolutions:

$$ \text{DSA}(x_i) = x_i \cdot \sigma \left( \text{concat}(y_1, \dots, y_r) \right) $$

where \( y_1, \dots, y_r \) are the outputs of the \( r \) parallel dilation branches. The full MSFA formulation combines these elements:

$$ \text{MSFA}(x_i) = x_i + \text{DSA}(\text{MSCB}(x_i)) \odot \text{CA}(\text{MSCB}(x_i)) $$
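The MSCB, DSA, and MSFA equations above can be traced with a single-image NumPy forward pass. This is a minimal sketch under stated assumptions, not the authors' implementation. The weights are random and the channel shuffle uses ShuffleNet-style interleaving. Several details the equations leave open are assumed here: each DSA branch applies a 3×3 dilated convolution (dilations 1, 2, 3) to the channel-mean map, a 1×1 convolution fuses the concatenated branch outputs before the sigmoid, and CA is a squeeze-and-excitation-style gate that returns per-channel weights. The helper names (`pointwise`, `depthwise`, `init_params`, and so on) are hypothetical.

```python
import numpy as np


def pointwise(x, w):
    """1x1 convolution (PW): mixes channels. x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def depthwise(x, kernels, dilation=1):
    """Depthwise (DW) 2D cross-correlation with zero 'same' padding.
    x: (C, H, W); kernels: (C, k, k) with odd k, one kernel per channel."""
    c, h, w = x.shape
    k = kernels.shape[-1]
    pad = dilation * (k // 2)
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c, h, w))
    for i in range(k):
        for j in range(k):
            window = xp[:, i * dilation:i * dilation + h, j * dilation:j * dilation + w]
            out += kernels[:, i, j][:, None, None] * window
    return out


def channel_shuffle(x, groups):
    """S_g: interleave channels across groups (ShuffleNet-style)."""
    c, h, w = x.shape
    return x.reshape(groups, c // groups, h, w).transpose(1, 0, 2, 3).reshape(c, h, w)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def mscb(x, p, groups=2):
    """MSCB(x) = PW(S_g(sum_k DW_k(PW(x)))) for k in {3, 5, 7}."""
    x_bar = pointwise(x, p["expand"])                      # expand channels
    multi = sum(depthwise(x_bar, p[f"dw{k}"]) for k in (3, 5, 7))
    return pointwise(channel_shuffle(multi, groups), p["project"])


def dsa(x, p, dilations=(1, 2, 3)):
    """Dilated spatial attention. ASSUMED: each branch is a 3x3 dilated conv on the
    channel-mean map; a 1x1 conv fuses the concatenated maps into one."""
    pooled = x.mean(axis=0, keepdims=True)                 # (1, H, W)
    ys = [depthwise(pooled, p["dsa_k"][j][None], dilation=d)
          for j, d in enumerate(dilations)]
    fused = pointwise(np.concatenate(ys, axis=0), p["dsa_fuse"])  # (1, H, W)
    return x * sigmoid(fused)


def ca(x, p):
    """ASSUMED SE-style channel attention: returns per-channel weights (C, 1, 1)."""
    gap = x.mean(axis=(1, 2))                              # global average pool
    return sigmoid(p["ca_w"] @ gap)[:, None, None]


def msfa(x, p):
    """MSFA(x) = x + DSA(MSCB(x)) * CA(MSCB(x)), computing MSCB(x) once."""
    m = mscb(x, p)
    return x + dsa(m, p) * ca(m, p)


def init_params(c, expansion=2, n_branches=3, seed=0):
    """Random weights for a hypothetical configuration (shape checks only)."""
    rng = np.random.default_rng(seed)
    ce = c * expansion
    p = {"expand": rng.normal(size=(ce, c)) * 0.1,
         "project": rng.normal(size=(c, ce)) * 0.1,
         "dsa_k": rng.normal(size=(n_branches, 3, 3)) * 0.1,
         "dsa_fuse": rng.normal(size=(1, n_branches)) * 0.1,
         "ca_w": rng.normal(size=(c, c)) * 0.1}
    for k in (3, 5, 7):
        p[f"dw{k}"] = rng.normal(size=(ce, k, k)) * 0.1
    return p


x = np.random.default_rng(1).normal(size=(8, 16, 16))
y = msfa(x, init_params(8))
print(y.shape)  # (8, 16, 16)
```

The MSCB output is computed once and passed to both attention branches, matching the equation in which DSA and CA both act on \( \text{MSCB}(x_i) \). The residual term \( x_i \) only type-checks because the final pointwise convolution projects back to the input channel count.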

To counteract information loss in deep layers, we implement Low-level Feature Distribution (LFD) and Local Context Channel Attention (LCA). LFD propagates shallow features containing high-frequency details to deeper layers, while LCA dynamically adjusts feature fusion weights:

$$ W_{\text{LCA}} = x \cdot \sigma \left( \text{Conv}(y_{\text{avg}}, k) \right) $$

where \( y_{\text{avg}} \) is the global average pooling output and \( k \) is an adaptively sized kernel. To handle the extreme foreground-background imbalance in surveying UAV datasets, we design a Scale and Location Sensitive (SLS) loss:

$$ \mathcal{L}_{\text{SLS}} = \mathcal{L}_S + \mathcal{L}_L = \left(1 - \omega \frac{|A_p \cap A_{\text{gt}}|}{|A_p \cup A_{\text{gt}}|}\right) + \left(1 - \frac{\min(d_p, d_{\text{gt}})}{\max(d_p, d_{\text{gt}})} + \frac{4}{\pi^2}(\theta_p - \theta_{\text{gt}})^2 \right) $$

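where \( A_p \) and \( A_{\text{gt}} \) are the predicted and ground-truth target regions, \( \omega \) scales the loss according to the target size discrepancy \( \delta = \big| |A_p| - |A_{\text{gt}}| \big| \), and the distance (\( d \)) and angle (\( \theta \)) terms, taken from the polar coordinates of the predicted and ground-truth target centers, penalize center misalignment.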
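The SLS loss above can be sketched in NumPy on soft masks. This is a minimal illustration under two loud assumptions. First, the text only says that \( \omega \) scales the loss by \( \delta \), so the sketch uses a hypothetical \( \omega = 1 - \delta / \max(|A_p|, |A_{\text{gt}}|) \), which equals 1 when the areas match and shrinks as they diverge; this is not the authors' exact weighting. Second, the polar origin is assumed to be the top-left pixel. The function names are hypothetical.

```python
import numpy as np


def soft_iou(pred, gt, eps=1e-6):
    """Soft IoU |A_p ∩ A_gt| / |A_p ∪ A_gt| for masks with values in [0, 1]."""
    inter = (pred * gt).sum()
    union = pred.sum() + gt.sum() - inter
    return inter / (union + eps)


def center_polar(mask, eps=1e-6):
    """Mass center of a (soft) mask as polar (d, theta).
    ASSUMPTION: the origin is the top-left pixel."""
    h, w = mask.shape
    rows, cols = np.mgrid[0:h, 0:w]
    total = mask.sum() + eps
    cy = (rows * mask).sum() / total
    cx = (cols * mask).sum() / total
    return np.hypot(cx, cy), np.arctan2(cy, cx)


def sls_loss(pred, gt, eps=1e-6):
    """L_SLS = L_S + L_L, following the equation above."""
    area_p, area_gt = pred.sum(), gt.sum()
    delta = abs(area_p - area_gt)                      # size discrepancy δ
    # HYPOTHETICAL ω: 1 for equal areas, decreasing as δ grows
    omega = 1.0 - delta / (max(area_p, area_gt) + eps)
    loss_scale = 1.0 - omega * soft_iou(pred, gt, eps)
    d_p, th_p = center_polar(pred, eps)
    d_gt, th_gt = center_polar(gt, eps)
    loss_loc = (1.0 - min(d_p, d_gt) / (max(d_p, d_gt) + eps)
                + 4.0 / np.pi ** 2 * (th_p - th_gt) ** 2)
    return loss_scale + loss_loc


gt = np.zeros((32, 32))
gt[10:13, 10:13] = 1.0                 # 3x3 target
pred = np.zeros((32, 32))
pred[10:13, 12:15] = 1.0               # same size, shifted 2 px right
print(sls_loss(gt, gt), sls_loss(pred, gt))
```

With the origin at the top-left corner, both center angles lie in \( [0, \pi/2] \). Under that assumption \( (\theta_p - \theta_{\text{gt}})^2 \le \pi^2/4 \), so the factor \( 4/\pi^2 \) keeps the angle term within \( [0, 1] \), on the same scale as the distance-ratio term.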

| Models | Anti-UAV410 mIoU (%) | Anti-UAV410 Pd (%) | Anti-UAV410 Fa (×10⁻⁶) | Anti-UAV410 mAP (%) | IRSTD-1K mIoU (%) | IRSTD-1K Pd (%) | IRSTD-1K Fa (×10⁻⁶) | IRSTD-1K mAP (%) |
|---|---|---|---|---|---|---|---|---|
| YOLOv8 | 43.32 | 87.06 | 1278.78 | 78.43 | 22.43 | 54.01 | 93.01 | 10.06 |
| MSDANet | 41.39 | 88.44 | 176.42 | 84.98 | 46.61 | 59.39 | 44.26 | 47.27 |
| MSHNet | 43.41 | 87.83 | 114.64 | 85.48 | 67.37 | 83.18 | 64.45 | 73.86 |
| FENet (Ours) | 44.13 | 89.29 | 106.96 | 86.95 | 68.48 | 84.43 | 62.36 | 75.54 |
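The Anti-UAV410 comparisons in this table can be recomputed directly from its columns. The short sketch below reports the mAP gap in percentage points and the false-alarm (Fa) reduction relative to each baseline's own Fa. The dictionary and the `compare` helper are illustrative names, not part of any released code.

```python
# Anti-UAV410 columns copied from the table above (Fa in units of 1e-6)
anti_uav410 = {
    "YOLOv8":  {"mIoU": 43.32, "Pd": 87.06, "Fa": 1278.78, "mAP": 78.43},
    "MSDANet": {"mIoU": 41.39, "Pd": 88.44, "Fa": 176.42,  "mAP": 84.98},
    "MSHNet":  {"mIoU": 43.41, "Pd": 87.83, "Fa": 114.64,  "mAP": 85.48},
    "FENet":   {"mIoU": 44.13, "Pd": 89.29, "Fa": 106.96,  "mAP": 86.95},
}


def compare(ours, baseline):
    """Return (mAP gain in percentage points, relative Fa reduction in %)."""
    o, b = anti_uav410[ours], anti_uav410[baseline]
    map_gain_pts = round(o["mAP"] - b["mAP"], 2)
    fa_reduction_pct = round(100 * (b["Fa"] - o["Fa"]) / b["Fa"], 2)
    return map_gain_pts, fa_reduction_pct


for baseline in ("YOLOv8", "MSDANet", "MSHNet"):
    print(baseline, compare("FENet", baseline))
```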

Comprehensive evaluations on the Anti-UAV410 and IRSTD-1K datasets demonstrate FENet's effectiveness. As shown in Table 1, our model achieves state-of-the-art performance on Anti-UAV410 with 44.13% mIoU and 86.95% mAP. It outperforms MSDANet by 1.97 percentage points in mAP while lowering the false-alarm rate from 176.42×10⁻⁶ to 106.96×10⁻⁶, a 39.37% relative reduction. For surveying UAV detection, FENet's balance between detection probability and false alarms is particularly crucial given the security implications of missed detections. Ablation studies confirm each module's contribution:

| MSFA | LFD & LCA | SLS Loss | mIoU (%) | Pd (%) |
|---|---|---|---|---|
| ✗ | ✗ | ✗ | 42.20 | 87.55 |
| ✓ | ✗ | ✗ | 43.16 (+0.96) | 87.85 |
| ✗ | ✓ | ✗ | 43.35 (+1.15) | 88.96 |
| ✗ | ✗ | ✓ | 43.23 (+1.03) | 86.44 |
| ✓ | ✓ | ✓ | 44.13 (+1.93) | 89.29 |

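The MSFA module alone raises mIoU by 0.96 percentage points, while LFD & LCA adds 1.15 points by recovering details lost in deeper layers. The SLS loss alone improves mIoU by 1.03 points through its sensitivity to target scale and location, which is critical for small surveying UAVs, although Pd dips from 87.55% to 86.44% in that configuration. With all three components integrated, FENet reaches 44.13% mIoU (+1.93 points) and achieves the best balance between detection probability (89.29% Pd) and false-alarm suppression (106.96×10⁻⁶ Fa). Because MSCB is built on depthwise separable convolutions, the design aims to remain lightweight enough for real-time surveying drone monitoring systems where computational resources are constrained.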

Our approach enhances infrared UAV detection through three innovations. First, MSFA's multi-scale processing counters the high-frequency attenuation caused by the modulation transfer function (MTF) of thermal sensors. Second, the synergy between LFD and LCA preserves target integrity across network depths, which is essential for distant surveying UAVs with sub-10-pixel signatures. Third, the physics-informed SLS loss directly addresses infrared-specific challenges such as low signal-to-clutter ratios. Future work will explore onboard deployment for autonomous surveying drone security systems, as well as multi-spectral fusion. As UAV adoption expands, such advances will become increasingly vital for safe airspace integration.